Gliush Notebook

Notes about IT, work, and life

Notes for episode-0251

New approach could sink floating point computation (Posits)

https://www.nextplatform.com/2019/07/08/new-approach-could-sink-floating-point-computation/

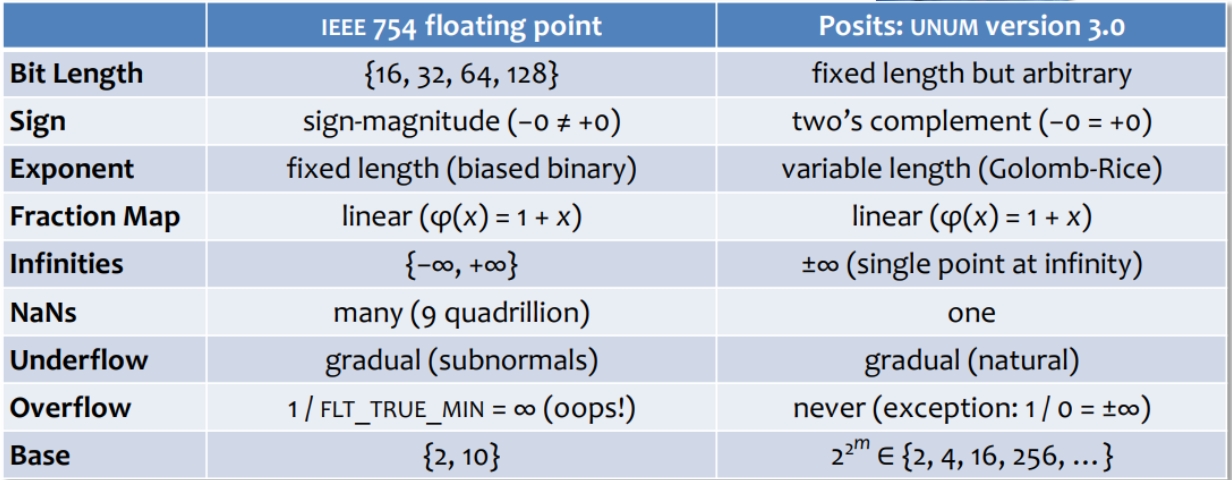

- The IEEE 754 float format was standardized in 1985.

- Its performance and accuracy are not the best

- John Gustafson reworked the “float” idea (his 3rd iteration) -> Posits

- Difference: denser representation of real numbers.

- Instead of fixed-size exponent and mantissa -> variable-length fields

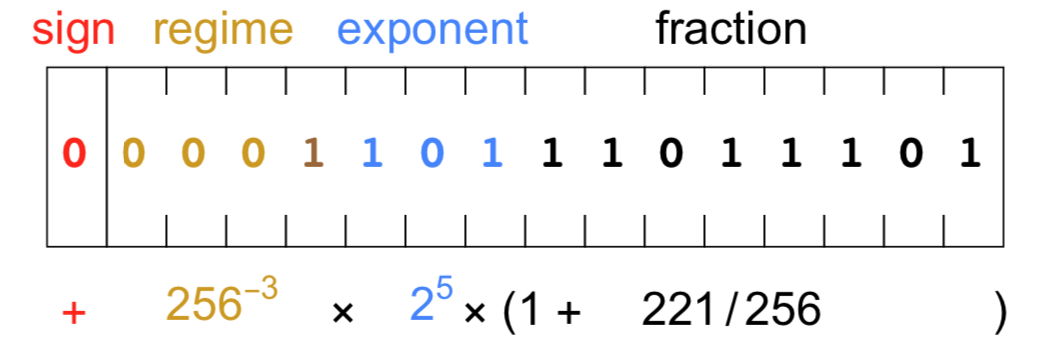

- Additional “regime” part, of ANY length

- Coded as “total number of bits + max number of bits for exponent”:

posit< bits, es >

- Priority: sign -> regime -> exponent (up to es bits, =0 if no bits available) -> fraction (=1.0 if no bits available)
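To make the sign -> regime -> exponent -> fraction priority concrete, here is a minimal decoder sketch. It is my own illustration, not code from the article: the function name `decode_posit` is made up, and it assumes the commonly described posit layout (negative values stored as two's complement, regime as a run of identical bits ended by the opposite bit, value = (1 + fraction) * 2^(k * 2^es + exponent)).

```python
from fractions import Fraction


def decode_posit(bits: int, n: int, es: int):
    """Decode an n-bit posit pattern with at most `es` exponent bits.

    Returns an exact Fraction, or None for the single special pattern
    (1 followed by zeros), which stands for +-infinity / "not a real".
    """
    mask = (1 << n) - 1
    bits &= mask
    if bits == 0:
        return Fraction(0)
    if bits == 1 << (n - 1):
        return None

    sign = bits >> (n - 1)
    if sign:
        bits = (-bits) & mask  # negative posits are two's complement

    # Everything after the sign bit, as a string of n-1 characters.
    body = format(bits & (mask >> 1), f"0{n - 1}b")

    # Regime: run of identical bits, terminated by the opposite bit
    # (or by the end of the word).
    first = body[0]
    run = len(body) - len(body.lstrip(first))
    k = run - 1 if first == "1" else -run

    rest = body[run + 1:]  # skip the terminating bit
    exp_bits = rest[:es]
    # Exponent bits that did not fit are treated as zeros.
    exponent = int(exp_bits.ljust(es, "0"), 2) if es > 0 else 0

    frac_bits = rest[es:]
    # No fraction bits left means the significand is exactly 1.0.
    fraction = Fraction(int(frac_bits, 2), 1 << len(frac_bits)) if frac_bits else Fraction(0)

    value = (1 + fraction) * Fraction(2) ** (k * (1 << es) + exponent)
    return -value if sign else value


if __name__ == "__main__":
    print(decode_posit(0b01000000, 8, 0))          # 1
    print(decode_posit(0b01010000, 8, 0))          # 3/2
    print(decode_posit(0b0000110111011101, 16, 3)) # 477/134217728
```

The last call is the 16-bit, es=3 example often used to introduce posits (0 0001 101 11011101); the first two are the smallest posit< 8, 0 > cases I could check by hand.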

- Advantages:

- +∞ = -∞

- only one NaN (1/∞)

- Higher precision (32-bit posits can replace 64-bit floats in almost all use cases!)

- Higher performance
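A rough way to see where the precision claim comes from: because the regime has variable length, numbers near 1.0 keep more fraction bits than numbers far from it. The sketch below only counts fraction bits. The helper name `fraction_bits` is hypothetical, and es = 2 for 32-bit posits is my assumption (a common choice), not something stated in these notes.

```python
def fraction_bits(n: int, es: int, k: int) -> int:
    """Fraction bits left in an n-bit posit whose regime value is k.

    A regime with k >= 0 takes k + 2 bits (k + 1 ones plus a terminating zero);
    a regime with k < 0 takes -k + 1 bits (-k zeros plus a terminating one).
    """
    regime_len = k + 2 if k >= 0 else -k + 1
    return max(0, n - 1 - regime_len - es)


if __name__ == "__main__":
    n, es = 32, 2  # assumed configuration for a 32-bit posit
    for k in (-8, -4, -1, 0, 1, 4, 8):
        low = 2.0 ** (k * 2 ** es)  # smallest magnitude covered by this regime
        print(f"k={k:3d}  values from {low:g}  fraction bits: {fraction_bits(n, es, k)}")
```

Under these assumptions a 32-bit posit has 27 fraction bits for magnitudes around 1.0, compared with 23 in a 32-bit IEEE float and 52 in a 64-bit one, and the count shrinks as the magnitude moves away from 1.0. So this illustrates the denser representation near 1.0; it does not by itself confirm the "replace 64-bit float" claim from the article.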

- IMHO

- Seems to be a better variant than IEEE float from 1985

- Not sure it can’t be improved further

- Very difficult to support in hardware -> I’d RFC it first