Gliush Notebook

Notes about IT, work, and life

Notes for episode-0212

Netflix’s Edge Load balancing

https://medium.com/netflix-techblog/netflix-edge-load-balancing-695308b5548c

- Goal: very high load. Even small fraction of errors - problem

- All servers are overloaded - problem. Usually, only a subset (just started, GC, hardware problem)

- Guiding principles:

- avoid distributed state

- avoid client-side configuration:

- not the same team -> problems

- server side update -> problems

- instead of static thresholds use adaptive mechanisms as func(traffic, performance, env)

- Load-balancing approach

- choice-of-2-algorithm to choose between the servers

- load-balancer’s view of the servers utilization

- servers’ view of the servers utilization

- “probation” and “server-age” mechanisms to avoid overloading newly started servers

- Server-Reported Utilization

- Actively report

- Passively report (addition health-check calls might needed)

- Choice-of-2 instead of RoundRobin/JoinSmallesQueue

- Client Health: errors for that server

- Server Utilization: most recent score provided by the server

- Client Utilization: number of inflight requests to the server from the LB

- FIRST: Filter out servers with high utilization and health

- for each request (agains stale filtering result)

- best-effor by making N attempts to find anything, if not - without filtering

- helps with large amount of problematic servers (not to choose between 2 broken servers)

- Probation:

- for new servers allow 1 request in-flight to gather stats

- Stats Decay:

- Linear decay over 30 secs to flush all the stats

- RESULTS

- Slower Servers Receive Less Traffic

- canary deployment won’t show degradation! :)

- Outlier detection is less effective

- some CPU/RPS combination should be used

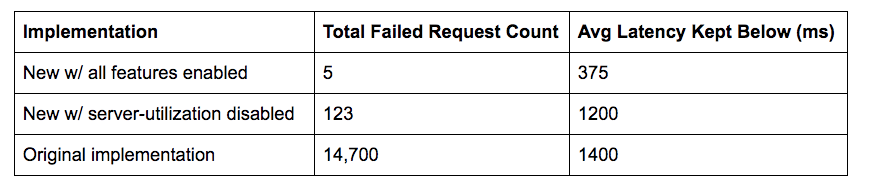

- Synthetic load-test results

- orders of magnitude reduction in load-shedding and connection errors compared to the round-robin

- 3x reduction in average and long-tail latency

- server-utilization feature added 10x error redution

- Impact on real production traffic

- Routing around intermittently and persistently degraded servers => avoid prod problems, do not need to wake eng up in the night (graphs)

- GC events - doesn’t harm anymore

Big Hack: How China Used a Tiny Chip to Infiltrate U.S. Companies

- AWS wanted to acquire a startup “Elemental” (software for compressing massive video files)

- AWS hired 3-party company to check Elemental’s security (to help with due-diligence)

- Problem

- SuperMicro assemble servers for Elemental, sent several servers to the company to check

- Found microchip (~ size of the grain of rice)

- The chip allowed the attackers to create a stealth doorway into any network included the server

- The chip was inserted during manufacturing

- 17 people confirmed the manipulation of Supermicro’s hardware and other elements of the attacks

- “the microchip altered the operating system’s core, so it could accept modifications. The chip could also contact computers controlled by the attackers in search of further instructions and code”

Kubernetes-1.12

- Kubelet TLS Bootstrap goes General Availability (GA) (автоматизация добавления kubelet в TLS-защищенный кластер, никакой ручной работы не требуется)

- Azure Virtual Machine Scale Sets (VMSS) and Cluster-Autoscaler is now Stable

- Arbitrary / CustomMetrics in the HorisontalPodAutoscaler is moving to a second beta

- HPA to reach proper size faster (no cooldown for scale up event, but still cooldown for scale down event)

- Vertical Scaling of Pods - in beta